Judea Pearl, recently announced winner of the 2011 Association for Computing Machinery (ACM) A.M. Turing Award for Contributions that Transformed Artificial Intelligence, has been at the forefront of the development of computational intelligence over the last two decades.

Vint Cerf, the chair of the ACM 2012 Turing Centenary Celebration, remarked that Pearl’s accomplishments “have provided the theoretical basis for progress in artificial intelligence and led to extraordinary achievements in machine learning, and they have redefined the term ‘thinking machine.’”

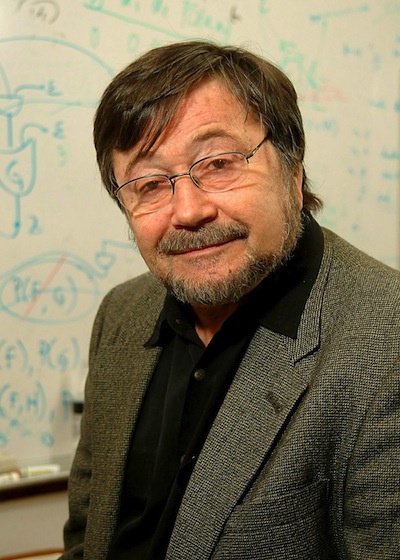

Judea Pearl

Here, Judea talks exclusively about his inspirations and challenges, as well as his thoughts on the future of causality and computational intelligence.

What inspired your initial interest and work in artificial intelligence? Was there any one scientist/philosopher/academic/writer who ignited your interest?

Not really, I don’t know of any person who would not be interested in understanding himself/herself, each with the best tools available to him/her. Religious preachers attempt this understanding through biblical narratives, Descartes tried to do it with mechanical analogies, psychologists with their crude model of the mind and we, computer scientists, with the most powerful symbol-processing mechanism ever available to mankind. And emulating is the key to understanding, because it provides us with the ability to take things apart and examine their behavior under the microscope of novel situations and novel configurations; this is what understanding is all about.

Like any other kid with interest in science I was fascinated by the lives of Archimedes, Galileo, Newton, Faraday and Einstein, not any one in particular. But their influence on me was perhaps especially profound because my science teachers, in Israel, had a unique ability to enliven these legendary figures and give us the illusion that we, too, took part in their discoveries.

What was the greatest challenge you have encountered in your research?

In retrospect, my greatest challenge was to break away from probabilistic thinking and accept, first, that people are not probability thinkers but cause-effect thinkers and, second, that causal thinking cannot be captured in the language of probability; it requires a formal language of its own. I say that it was my “greatest challenge” partly because it took me ten years to make the transition and partly because I see how traumatic this transition is nowadays to colleagues who were trained in the standard statistical tradition, including economists, psychologists and health scientists, and these are fields that crave the transition.

Can you describe a breakthrough moment that inspired the achievements of your work?

I described it in my book “Causality”. The first idea arose in the summer of 1990, while I was working with Tom Verma on “A Theory of Inferred Causation”. We played around with the possibility of replacing conditional probabilities with deterministic functions and, suddenly, everything began to fall into place: we finally had a mathematical object to which we could attribute familiar properties of physical mechanisms and causal relations, instead of those slippery probabilities with which we had been working so long in the study of Bayesian networks.

The second breakthrough came from Peter Spirtes’s lecture at the International Congress of Philosophy of Science (Uppsala, Sweden, 1991). In one of his slides, Peter illustrated how a causal diagram should change when a variable is manipulated. To me, that slide of Spirtes’s, when combined with the deterministic structural equations, was the key to unfolding the manipulative account of causation, then to its counterfactual account, and then to most other developments I pursued in causal inference.

What is the part of your work and research that you enjoy the most?

Watching my intuition amplified under the microscope of mathematical models, and then seeing how I can do things today that I could not do yesterday.

Your book “Causality: Models, Reasoning, and Inference” has been described by the Association for Computing Machinery as being “among the single most influential works in shaping the theory and practice of knowledge-based systems”. What do you think was the most important achievement of your book?

To computer scientists it was perhaps the development of probabilistic graphical models which enabled knowledge-based systems to handle uncertainty coherently, and distributively, while circumventing the combinatorial explosion. This actually started with my earlier book “Probabilistic Reasoning”. To empirical scientists it was the development of graphical causal models, because they permit investigators to articulate causal assumptions transparently, deduce their testable implications, combine them with data and answer difficult research questions that, previously, were at the mercy of folklore and guesswork. They even resolved the notorious Simpson’s paradox and reduced it to an exercise in graph theory.

What is the one book you’d recommend reading? (can be fiction/non-fiction)

Daniel Kahneman’s “Thinking, Fast and Slow”, especially the chapter “Causes Trump Statistics”.

Tweet your research/book in no more than 140 characters.

Causal inference is easy, once you overcome two mental barriers: statistical thinking and traditional probabilistic language. In all honesty, there is hardly a causal question that cannot be reduced today to mathematical analysis and machine implementation.

And finally, what is your Desert Island book/play/film/opera/piece of music?

I would take the Old Testament and Mozart’s Requiem, to constantly remind me where I come from and where I am aiming.

Join us for Part Two of this interview with Judea Pearl next week, where he will be talking about the future of causality and computational intelligence. In the meantime, you can discuss Pearl’s work over at our Philosophy Facebook Page.
